5/22/2014

OSX, ShareMouse

I'm a big user of InputDirector, used to use Synergy, and now I'm a subscriber to ShareMouse.

Just a quick note on my thoughts here:

1. Free is great

2. Reliable is better

3. Supported is best

I've got nothing against open source and sharing ideas, but a polished product is worth money, and one that has a roadmap and delivers on that roadmap deserves a customer.

ShareMouse is a local LAN KVM solution for [bi-directionally] sharing multiple keyboards and mice across multiple computers and their attached monitors. It also has a shared cut and paste buffer and cut-and-paste file transfer.

It's put out by the same people who offer MaxiVista, an IP-based monitor sharing tool for presentations and for extending a desktop.

The licensing seems fair: the free edition covers two nodes and two monitors, Standard adds more nodes and features, and Professional offers the most nodes and unlimited attached monitors.

It is a LAN solution, not a webtop sharing solution.

It can also protect its traffic with a shared password.

One of the coolest things is that you can "donglize" it by putting your license key on a portable flash drive and thereby "move" it back and forth between home and office.

You can install ShareMouse everywhere; only one device needs to hold a license, and only nodes sharing a password can connect to each other.

It doesn't support Linux (sad), but Linux supports RDP so well these days that if you need a desktop on a Linux server OS, you can get one that way. For the most part, though, I use Linux as a server host and don't run a lot of GUI apps.

Drag and drop of files from Windows to OSX and back works, as does cut and paste of files.

5/19/2014

OSX, extracting active IP and MAC

A bash one-liner to extract some useful info:

Lists all the interfaces as input to a bash for-each loop, then looks at the ifconfig output for each interface to find an "active" interface, then parses the ifconfig output (again) for the same interface looking for a key, then outputs only the value for that key.

Messy, but useful

I like to think of it as [horizontal] programming as opposed to [vertical] programming.

JMBP13M2012:~ jwillis$ for i in `ifconfig -l` ; do x=$(ifconfig "$i") && x=${x#*status: } && echo ${x%% *} | grep ^active > /dev/null && y=$(ifconfig $i) && y=${y#*inet } && echo ${y%% *} ; done

192.168.2.107

JMBP13M2012:~ jwillis$ for i in `ifconfig -l` ; do x=$(ifconfig "$i") && x=${x#*status: } && echo ${x%% *} | grep ^active > /dev/null && y=$(ifconfig $i) && y=${y#*ether } && echo ${y%% *} ; done

10:dd:b1:e0:1b:4a
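For comparison, here is the same logic written out "vertically" as a few bash functions. It is only a sketch that assumes the OSX ifconfig layout the one-liners rely on (a "status: active" line plus "inet " and "ether " keys); the names value_after, is_active and active_value are made up for illustration.

```shell
#!/usr/bin/env bash
# A "vertical" version of the one-liners above.
# Assumption: ifconfig output follows the OSX layout ("status: active", "inet ", "ether ").

# Print the first whitespace-delimited word that follows KEY in TEXT.
value_after() {
  local text=$1 key=$2 rest
  case $text in
    *"$key"*) ;;        # key present: keep going
    *) return 1 ;;      # key absent: print nothing, report failure
  esac
  rest=${text#*"$key"}        # drop everything up to and including KEY
  rest=${rest%%[[:space:]]*}  # keep only the first word (stops at space, tab or newline)
  printf '%s\n' "$rest"
}

# Succeed only when the interface's "status:" line says exactly "active".
is_active() {
  local status
  status=$(value_after "$1" "status: ") || return 1
  [ "$status" = "active" ]
}

# For every active interface, print the value that follows KEY
# ("inet " for the IPv4 address, "ether " for the MAC address).
active_value() {
  local key=$1 iface out
  for iface in $(ifconfig -l); do
    out=$(ifconfig "$iface") || continue
    if is_active "$out"; then
      value_after "$out" "$key"
    fi
  done
}
```

Calling active_value "inet " or active_value "ether " should print the same values as the two one-liners. The trailing space in each key matters: "inet " does not match the "inet6" lines.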

sw_vers -productVersion

10.8.5

sw_vers -buildVersion

12F45

x=$(sysctl -a | grep kern.boottime | head -n 1) && x=${x#*= } && echo ${x%% * }

Sun May 18 23:45:53 2014
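If you only want the boot time, asking sysctl for the single key avoids dumping every value through grep. A small sketch that handles both the "name = date" line shown above and a "{ sec = ..., usec = ... } date" form; that second format is my assumption about what sysctl kern.boottime prints on OSX, and boot_date is a made-up helper name.

```shell
#!/usr/bin/env bash
# Pull the human-readable boot time out of one kern.boottime line.
# Assumption: the line has one of these two shapes:
#   kern.boottime = Sun May 18 23:45:53 2014                          (sysctl -a, as above)
#   kern.boottime: { sec = ..., usec = ... } Sun May 18 23:45:53 2014  (sysctl kern.boottime)
boot_date() {
  local line=$1 brace='} '
  case $line in
    *"$brace"*) printf '%s\n' "${line#*"$brace"}" ;;  # struct form: text after the closing brace
    *"= "*)     printf '%s\n' "${line#*= }" ;;         # plain form: text after "= "
    *)          return 1 ;;                            # neither shape: report failure
  esac
}

# Usage (on the Mac): boot_date "$(sysctl kern.boottime)"
```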

5/15/2014

P2V, Windows 2008r2 to KVM

Capturing a [live] Windows system while it is running has become trivial with Volume Shadow Copy and Disk2vhd. As long as the Volume Shadow Copy option is checked, it can even store the captured volume images on one of the disks that is itself being captured.

The only restrictions are that VHD is specifically intended for use with Windows 2008r2 or earlier and has a final VHD size limit of 127 GB. That does not mean the total potential storage space of its contents must be less than 127 GB: you [can] capture a 149 GB disk or partition as long as the resulting VHD does not end up larger than the 127 GB VHD size limit.

VHDx is specifically for Windows 2012 or above, but Windows 2012 also has tools for converting a VHDx back to a VHD, as long as the resulting VHD does not end up larger than the 127 GB VHD size limit. VHDx supports volumes up to 64 TB, but the partitioning scheme and the file systems inside the volume may limit you to something less than 64 TB. For example, an MBR is practically limited to 2 TB. A GPT has much larger size limits, but the file system put into each partition may have its own size limitations.
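The 2 TB MBR figure falls out of simple arithmetic: MBR stores partition start and length as 32-bit sector counts, so with 512-byte sectors the ceiling is 2^32 * 512 bytes. A quick bash check (mbr_max_bytes is just an illustrative name; the 4096-byte case assumes a 4K-native disk):

```shell
#!/usr/bin/env bash
# MBR partition entries hold 32-bit sector counts, so the largest addressable
# size is 2^32 sectors times the logical sector size (default: 512 bytes).
mbr_max_bytes() {
  local sector_size=${1:-512}
  echo $(( (1 << 32) * sector_size ))
}

mbr_max_bytes        # 2199023255552 bytes = 2 TiB
mbr_max_bytes 4096   # 17592186044416 bytes = 16 TiB
```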

Once it is in the VHD format, converting it to a RAW image which KVM can use will require a tool.

The traditional qemu-img tool "could be used":

qemu-img convert -f vpc -O raw /kvm/images/disk/disk.vhd /kvm/images/disk/disk.img

However, earlier versions of this tool had bugs when working with large VHD files, which could result in unusable RAW files. If you use qemu-img to perform the format conversion, be sure to test the RAW volume as soon after the conversion as possible, to avoid data loss from assuming the volume is usable.
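One way to make the "test it right away" step hard to skip is to wrap the conversion and a check together. This sketch assumes a qemu-img new enough to have the compare subcommand, which reads both images through their format drivers and exits 0 only when the contents match; vhd_to_raw_checked is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: convert a VHD ("vpc") image to RAW, then immediately compare the two.
# Assumption: the installed qemu-img has the "compare" subcommand.
vhd_to_raw_checked() {
  local src=$1 dst=$2
  if [ -e "$dst" ]; then
    echo "refusing to overwrite $dst" >&2
    return 1
  fi
  # -p shows progress; -f and -O name the input and output formats.
  qemu-img convert -p -f vpc -O raw "$src" "$dst" || return 1
  # -f/-F give the formats of the first/second image; exit status 0 means identical contents.
  if qemu-img compare -f vpc -F raw "$src" "$dst"; then
    echo "OK: $dst matches $src"
  else
    echo "MISMATCH: do not trust $dst" >&2
    return 1
  fi
}

# Usage: vhd_to_raw_checked /kvm/images/disk/disk.vhd /kvm/images/disk/disk.img
```

The compare step reads both images end to end, so expect it to take roughly as long as the conversion itself.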

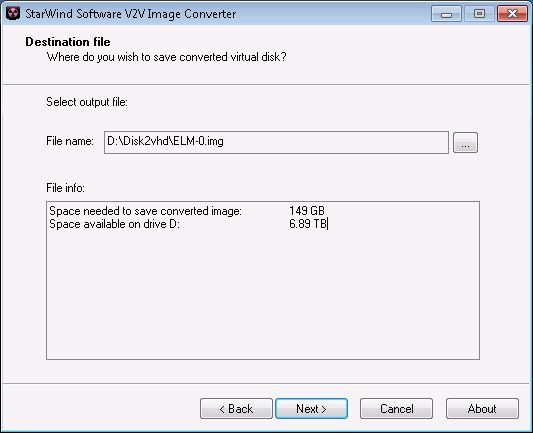

Another tool is the free StarWind V2V Image Converter.

It supports many formats and helpfully calculates the resulting image size for a conversion (remember that 127 GB VHD size limit? This tool will warn you if you cross it).

One thing to be aware of is that VPC, or "Virtual PC", is a synonym for the VHD file format. So wherever you see Virtual PC mentioned in the context of storage, it refers to the VHD format.

Note: this tool does not support the VHDx format.

RAW volumes are not dynamically expanding the way a dynamic VHD is: a RAW file's size is the full size of the virtual disk, so converting to this format will typically result in a larger on-disk file than the original.
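To see how much bigger the result really is, it helps to look at both the apparent size and the blocks actually allocated, since qemu-img typically writes RAW output as a sparse file on Linux. A sketch assuming GNU coreutils stat on the Linux host (the -c format flags differ on OSX/BSD); image_sizes is an illustrative name. qemu-img info reports a similar "virtual size" versus "disk size" pair.

```shell
#!/usr/bin/env bash
# Sketch: report a disk image's apparent size versus the bytes actually allocated.
# Assumes GNU coreutils stat: %s = size in bytes, %b = blocks allocated, %B = block size.
image_sizes() {
  local f=$1 apparent blocks blksize
  read -r apparent blocks blksize < <(stat -c '%s %b %B' -- "$f") || return 1
  echo "apparent=$apparent allocated=$(( blocks * blksize ))"
}

# Usage: image_sizes /kvm/images/disk/disk.img
```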

Once this is done, the raw image file can be copied from the Windows system to a Linux host or storage server. On Windows 2008r2, install the [Services for Network File System] [Role].

And the RSAT [Services for Network File System Tools] [Feature].

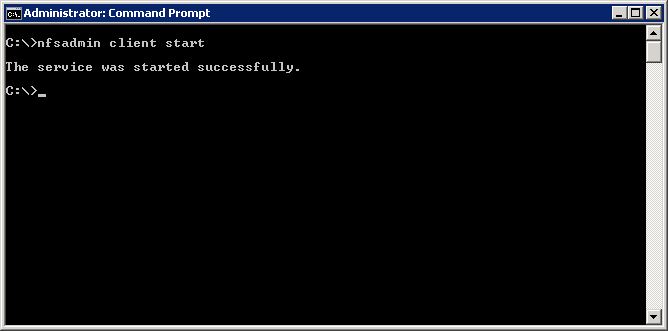

Then "Start" the NFS client service

Then "Mount" the Linux NFS share

And copy the raw image file from the Windows system to the Linux host or storage system

Additional details might include the following.

1. Set up the Linux exports to allow the IP address of the Windows server to mount the exported share.

2. Allow no_root_squash so that the anonymous user from that Windows server can read and write.

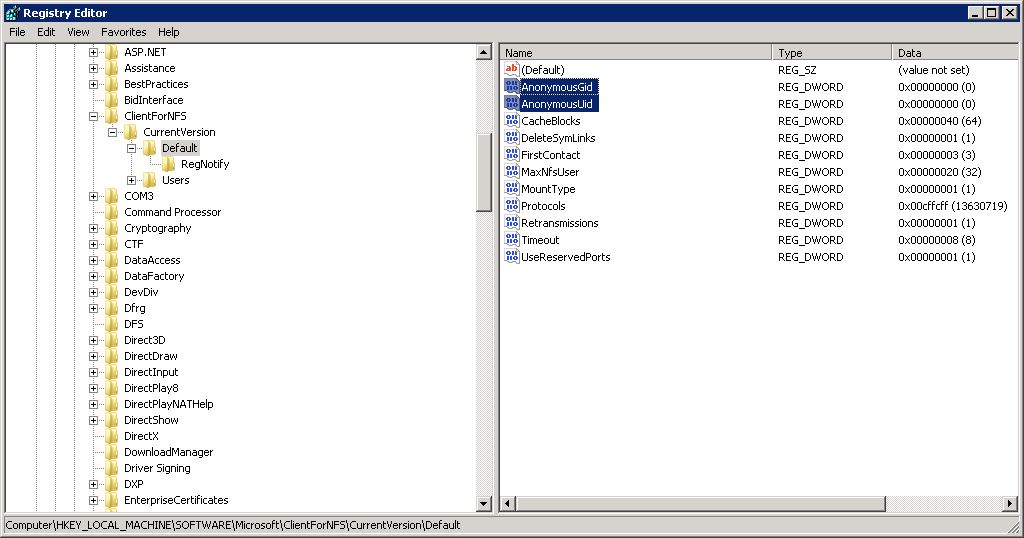

3. Set the Windows registry values that map the Windows "pseudo UID and GID" for the Anonymous user if you are not using AD user mapping (they default to -2 and -2, somewhat like Mr. Nobody and the Root Squash problems.. but enough about errant vegetables..)

4. If your Linux KVM system is using the luci and ricci clustering solution, you will need to log in to the luci web console for the cluster, add a new NFS client "resource", and then add that newly created "nfs client resource" as a [child] of your cluster's "nfsexports" service.

5. Any "Error 53" message from Windows means "the network path was not found". That might be true, but it is the bit bucket for errors and can mean many things: it may simply be "Access Denied" because the exports file has no pattern granting access to the Windows server's IP address, or lacks the permissions for anonymous mounting from that address, or because the UID/GID is unknown. Problems reading or writing after the mount succeeds are likely due to a missing no_root_squash, or to the automatic assumption that UID/GID "0" should be treated as the 65534 "nobody" user with few permissions. There are not a lot of logs or diagnostics for NFS on either Windows or Linux, since Linux will often default to "NFS3" over UDP, which isn't very chatty about problems. "NFS4" has even more advanced "issues" but might offer a performance boost if you have the experience to get it working properly; changing the read/write buffer sizes in the Windows mount options might be a more efficient use of time. You can also capture the traffic with Wireshark and let it decode the NFS packets to glean useful information from the NFS protocol error messages.
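For items 1 and 2 on a plain (non-cluster) Linux NFS server, the export boils down to one line in /etc/exports. A sketch that builds that line; the path /kvm/images and the Windows server address 192.168.2.50 are hypothetical placeholders, and on a luci/ricci cluster the same options should go into the NFS client resource instead of /etc/exports.

```shell
#!/usr/bin/env bash
# Sketch: build an /etc/exports entry that lets one Windows server mount a share
# read-write without root squashing. PATH and CLIENT are supplied by the caller.
exports_entry() {
  local path=$1 client=$2
  # rw: allow writes; sync: commit before replying; no_root_squash: keep UID 0 as root.
  # No space between the client and "(": a space would apply the options to every host.
  printf '%s %s(rw,sync,no_root_squash)\n' "$path" "$client"
}

# Typical follow-up on the Linux host (as root), with hypothetical values:
#   exports_entry /kvm/images 192.168.2.50 >> /etc/exports
#   exportfs -ra             # re-read /etc/exports
#   exportfs -v              # list exports with their effective options
#   showmount -e localhost   # what a client would be offered
# While reproducing an "Error 53", capture the traffic for Wireshark to decode:
#   tcpdump -i eth0 -w nfs.pcap host 192.168.2.50
```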
