During processing, the images may also be read with optical character recognition (OCR) software to extract the text they contain. The recognized text can either replace the image content in a new, image-free document format, or serve as a keyword map that points to coordinates in the image of the page.

Finally, the images are bound into an electronic document, such as an ebook.

These are the steps for transforming a physical book into an ebook.

A couple of things to consider:

Choosing an image scanner and a lighting environment, and then scanning hundreds of pages per session, can be time-consuming and difficult.

There are many scanners to choose from, but the choice may be limited by the available budget.

The Czur ET16 is one scanner that costs less than many other image scanners. It includes a lighting system, hand and foot controls for remotely triggering a scan, an autoscan mode that detects when a page has been turned and automatically triggers a new scan, and software for post-processing, optical character recognition (OCR) and binding into Portable Document Format (PDF) ebooks.

It arrives as a kit that requires some assembly. The software is downloaded from the internet and installed on a Windows PC. During installation the software is activated using an activation key attached to the bottom of the scanner. The scanner is then connected to the Windows PC by a USB cable and switched on. The Windows PC recognizes the scanner and completes device setup.

Scanning a book can take considerable time and may require more than one session. The software records when each session began by creating a new folder on the file system for that session, labeled with the date and time the session started.
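The idea of one folder per session can be sketched in a few lines of Python. This is only an illustration: the label format `%Y-%m-%d_%H-%M-%S` and the function names `session_folder_name` and `start_session` are assumptions, since the naming scheme the Czur software really uses is not described here.

```python
from datetime import datetime
from pathlib import Path
import tempfile


def session_folder_name(started: datetime) -> str:
    # Hypothetical label format; the real software may name folders differently.
    return started.strftime("%Y-%m-%d_%H-%M-%S")


def start_session(root: Path, started: datetime) -> Path:
    """Create a folder for a session, labeled with its starting date and time."""
    folder = root / session_folder_name(started)
    # exist_ok=False: two sessions should never share one folder.
    folder.mkdir(parents=True, exist_ok=False)
    return folder


if __name__ == "__main__":
    # Use a throwaway directory so the sketch leaves nothing behind.
    with tempfile.TemporaryDirectory() as tmp:
        folder = start_session(Path(tmp), datetime.now())
        print("session folder:", folder.name)
```

Because the label sorts alphabetically in the same order as it sorts by time, a book scanned over several sessions can be reassembled by listing the folders by name.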

To scan a book, one begins by setting aside a block of time in which interruptions are unlikely. The Windows PC and scanner are moved to a darkened room, where ambient lighting is less likely to interfere with the light the scanner provides for capturing images. The background mat is placed below the imaging sensor and lined up with the edges of the scanner.

Unneeded running processes on the Windows PC are closed and the Czur software is started. A USB cable is connected between the Windows PC and the scanner. A choice is made between the hand switch, the foot switch and autoscan; if a switch is chosen, it is attached to the scanner. The scanner is switched on.

After Scan is chosen rather than Present, the Windows PC running the Czur software displays the 'work table' for performing post-processing, optical character recognition and binding. Before these tasks can be started, a scanning session must be performed.

The [Scan] button in the upper-left menu is selected, which starts a 'sub application' that searches for a scanner connected to the Windows PC by USB cable. A live 'field of view' in the center of the screen shows what the scanner can see.

The right side shows the directory listing of the current 'date and time' session folder.

The book to be scanned is placed in the scanner's 'field of view'.

Scanning a book cover will not require holding it in place. Scanning the interior pages of a book may require holding the book open using fingers or hands. The scanner will automatically process an image with color drop-key to remove fingers or hands wearing colored drop-key covers while holding a book in place. Color drop-key removal will only be performed for fingers or hands found to the left, right or below the page content.

When a scanning session is complete, the [X] in the Upper Right is clicked to close the 'sub application'. This brings all of the scanned images to the work table and populates the Right navigation panel with their names.

As each image is scanned, it is copied to the PC and given a filename. As each file arrives on the PC, it is "processed" according to the "profile" that was selected for the scanning session. A 'pro-file' defines how to 'process-files' for a session.

A work table full of scanned images can immediately be bound into a PDF or TIFF file by going to the Lower-Right and pressing [all] to 'Select all' images, then going to the Upper-Left, pressing 'Archive' and selecting [as PDF] or [as TIFF]. A prompt for a destination and filename appears, and once it is answered the 'Archive' document is created.
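Binding produces one multi-page document, so the images need a page order. The sketch below shows one plausible way to derive that order from filenames using a natural sort, so that a scan numbered 10 follows a scan numbered 2. The filenames, the extension list and the helper names `natural_key` and `page_order` are hypothetical; how the Czur software actually orders pages is not stated here.

```python
import re

# Assumed set of image extensions, for illustration only.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".tif", ".tiff")


def natural_key(name: str):
    # Split into alternating text and digit runs so numbers compare as numbers:
    # "scan_10.jpg" -> ["scan_", 10, ".jpg"]
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", name)]


def page_order(filenames):
    """Return only the image files, in the order they would be bound."""
    images = [n for n in filenames if n.lower().endswith(IMAGE_EXTENSIONS)]
    return sorted(images, key=natural_key)


if __name__ == "__main__":
    listing = ["scan_10.jpg", "scan_2.jpg", "notes.txt", "scan_1.jpg"]
    for page_number, name in enumerate(page_order(listing), start=1):
        print(page_number, name)
```

A plain alphabetical sort would place `scan_10.jpg` before `scan_2.jpg`, which is why the key converts digit runs to integers.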

PDF and TIFF files are 'multi-page' documents which can be viewed in a PDF viewer, such as the Chrome browser, or in a fax viewing program.

The description above is the simplest workflow. There are many settings, controls and tools which can be used at each step to gain greater control over the overall process and the final output.

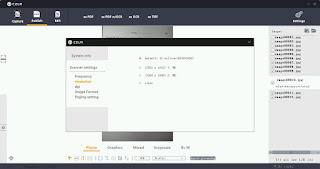

Global settings such as Scanned Image resolution, Image file format, dpi and Left/Right page splitting order are chosen from the Upper Right [Settings - Gear] icon.
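To illustrate what a Left/Right page splitting order means, the following sketch assumes each scan of an open book is split into a left and a right half, and the setting decides which half comes first in the page sequence. The function name `split_page_names` and the `-L`/`-R` suffixes are made up for this example and are not taken from the Czur software.

```python
def split_page_names(scan_names, left_first=True):
    """Return page names in reading order for scans split into two halves.

    left_first=True suits books read left to right; left_first=False suits
    books read right to left.
    """
    pages = []
    for name in scan_names:
        left, right = f"{name}-L", f"{name}-R"  # hypothetical naming
        pages.extend([left, right] if left_first else [right, left])
    return pages


if __name__ == "__main__":
    scans = ["spread1", "spread2"]
    print(split_page_names(scans))
    print(split_page_names(scans, left_first=False))
```

Getting this setting wrong does not lose any pages, but every pair of pages ends up swapped in the bound document.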

Session settings such as Color Mode (a combination of Brightness, Contrast and Sharpness) are selected from within the Scan 'sub application', at the Upper-Right, just before scanning. They can be tweaked somewhat by disabling [auto] exposure and manually moving the slider control underneath the preview image.

Session settings such as the choice of Profile (a combination of deskew, autocrop, dewarp, page-split or none) are selected from within the Scan 'sub application', at the Lower-Right, just before scanning. Tweaking is performed on the image files on the work table after the 'sub application' is closed.
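A profile can be thought of as a set of switches that decide which processing steps each incoming file receives. The sketch below models that idea only. The `Profile` class and the `process` function are illustrative, the step order simply follows the order in which the steps are listed above, and no real image processing is performed.

```python
from dataclasses import dataclass

# Order taken from the list in the text; the software's real order is unknown.
STEP_ORDER = ("deskew", "autocrop", "dewarp", "page_split")


@dataclass(frozen=True)
class Profile:
    deskew: bool = False
    autocrop: bool = False
    dewarp: bool = False
    page_split: bool = False

    def steps(self):
        """Names of the enabled steps; an empty list means 'none'."""
        return [step for step in STEP_ORDER if getattr(self, step)]


def process(filename, profile):
    # Placeholder: report which steps would run instead of editing the image.
    return {"file": filename, "applied": profile.steps()}


if __name__ == "__main__":
    book_profile = Profile(deskew=True, dewarp=True, page_split=True)
    for name in ["0001.jpg", "0002.jpg"]:
        print(process(name, book_profile))
```

Choosing the profile once per session, rather than per image, is what lets every file be processed the same way as it arrives.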

When working on the work table, one image at a time can be tweaked with the controls underneath the selected image. Multiple images can be tweaked as a batch by selecting all the images that make up the batch group and then prototyping the changes to be applied to every image in the group from the [Bulk] menu option.

Archiving can create PDF or TIFF files, or an OCR doc file, and can optionally combine the OCR output with a PDF file to create a variant of the standard PDF format. These PDF variant files can include a keyword index that maps to locations within the image pages in the PDF file. This makes the PDF image document keyword 'searchable'.
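The keyword index can be pictured as a lookup table from each recognized word to the pages and coordinates where it appears. The sketch below assumes OCR output arrives as `(page, text, box)` tuples with boxes given as `(left, top, right, bottom)` pixels. That input shape, the sample words and the function names are assumptions for illustration, not the format the Czur software or the PDF file uses.

```python
from collections import defaultdict

PUNCTUATION = ".,;:!?\"'()"


def build_keyword_index(ocr_words):
    """Map each lowercased word to a list of (page, box) locations."""
    index = defaultdict(list)
    for page, text, box in ocr_words:
        key = text.strip(PUNCTUATION).lower()
        if key:  # skip tokens that were only punctuation
            index[key].append((page, box))
    return dict(index)


def find(index, keyword):
    """Return every location of a keyword, or an empty list if absent."""
    return index.get(keyword.lower(), [])


if __name__ == "__main__":
    # Hypothetical OCR results for two pages.
    words = [
        (1, "Whale", (120, 80, 210, 110)),
        (1, "the", (60, 80, 110, 110)),
        (3, "whale.", (40, 300, 130, 330)),
    ]
    index = build_keyword_index(words)
    for page, box in find(index, "WHALE"):
        print("page", page, "at", box)
```

A viewer can use locations like these to jump to the right page and highlight the matching region of the image, which is what makes an image-only document searchable.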

The end result of a scanning session is always a multi-page Archive PDF or TIFF. The scanned images from a session are removed to ensure space is available for the next session.
